optimistic-gold•3y ago

Running the crawler in Docker breaks its blocking bypass

When I run the crawler directly with Node.js it works perfectly, but when I run it in Docker I get blocked.

11 Replies


Try configuring FingerprintOptions (https://crawlee.dev/api/browser-pool/interface/FingerprintOptions) to match your local environment as closely as possible.
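To find that "closest match", it may help to print a few environment properties both locally and inside the container and compare them. For example, the Docker image runs Linux and often defaults to UTC, while the residential proxy in this thread is in Brazil. A minimal sketch, assuming Node.js 16+ (matching the `:16` base image); `describeEnvironment` is a hypothetical helper, not part of Crawlee:

```typescript
import * as os from 'os';

// Hypothetical helper: a few host properties that commonly differ
// between a developer machine and a Docker container.
interface EnvironmentSummary {
    platform: string;    // e.g. 'win32' locally vs 'linux' in Docker
    arch: string;
    timeZone: string;    // containers often default to UTC
    locale: string;
    nodeVersion: string;
    cpuCount: number;    // may be lower (or reported differently) in a container
}

function describeEnvironment(): EnvironmentSummary {
    const resolved = Intl.DateTimeFormat().resolvedOptions();
    return {
        platform: os.platform(),
        arch: os.arch(),
        timeZone: resolved.timeZone,
        locale: resolved.locale,
        nodeVersion: process.version,
        cpuCount: os.cpus().length,
    };
}

// Run once locally and once inside the container, then diff the output.
console.log(JSON.stringify(describeEnvironment(), null, 2));
```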

optimistic-goldOP•3y ago

I already tried that, without success.

This is my current setup:

```typescript
import { Actor } from 'apify';
import { BrowserName, DeviceCategory, OperatingSystemsName } from '@crawlee/browser-pool';
import { Dictionary, PlaywrightCrawler, RequestOptions } from 'crawlee';
import { LaunchOptions, firefox } from 'playwright';
import { crawlerOptions } from './config.js';
import { router } from './routes.js';

await Actor.init();

const proxyConfiguration = await Actor.createProxyConfiguration({
    groups: ['RESIDENTIAL'],
    countryCode: 'BR',
});

const crawler = new PlaywrightCrawler({
    launchContext: {
        launchOptions: {
            userAgent: crawlerOptions.userAgent,
            cookie: crawlerOptions.extraHeaders.Cookie,
            extraHTTPHeaders: crawlerOptions.extraHeaders,
        } as LaunchOptions,
        userAgent: crawlerOptions.userAgent,
        launcher: firefox,
    },
    proxyConfiguration,
    headless: true,
    requestHandler: router,
    minConcurrency: 4,
    useSessionPool: true,
    sessionPoolOptions: {
        sessionOptions: {
            maxUsageCount: 5,
            maxErrorScore: 1,
        },
    },
    browserPoolOptions: {
        fingerprintOptions: {
            fingerprintGeneratorOptions: {
                browsers: [{ name: BrowserName.chrome, minVersion: 87 }],
                devices: [DeviceCategory.desktop],
                operatingSystems: [OperatingSystemsName.windows],
                locales: ['en-US', 'en', 'pt-BR', 'pt'],
                screen: {
                    minHeight: 1080,
                    minWidth: 1920,
                    maxHeight: 1080,
                    maxWidth: 1920,
                },
            },
        },
    },
});

const requests: RequestOptions<Dictionary>[] = Array.from({ length: 4 }, (_, i) => {
    return {
        url: `${crawlerOptions.virtualFootballUrl}`,
        label: `league`,
        uniqueKey: `league-${i + 2}`,
        userData: {
            league: i + 1,
        },
    };
});

await crawler.run(requests);

await Actor.exit();
```
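One detail in this setup that may be worth ruling out: the user agent and the fingerprint options both describe Chrome 87 on Windows, while `launcher: firefox` actually starts Firefox, and inside the Docker image it runs on Linux. This thread does not confirm that the target site checks for it, but a mismatch between the claimed browser and the real engine is a commonly cited detection signal. A minimal, self-contained sketch of a consistency check; `uaBrowserFamily` and `checkUaMatchesLauncher` are hypothetical helpers, the browser names are plain strings mirroring the ones in the post, and the regexes are a rough heuristic rather than a full User-Agent parser:

```typescript
type BrowserFamily = 'chrome' | 'firefox' | 'safari' | 'unknown';
type Launcher = 'chromium' | 'firefox' | 'webkit';

// Which browser family each Playwright launcher really is.
const EXPECTED_FAMILY: Record<Launcher, BrowserFamily> = {
    chromium: 'chrome',
    firefox: 'firefox',
    webkit: 'safari',
};

// Rough heuristic. Order matters: Chrome UAs also contain "Safari/",
// so the Chrome check must run before the Safari check.
function uaBrowserFamily(userAgent: string): BrowserFamily {
    if (/Firefox\/\d+/.test(userAgent)) return 'firefox';
    if (/(Chrome|Chromium)\/\d+/.test(userAgent)) return 'chrome';
    if (/Version\/\d+.*Safari\/\d+/.test(userAgent)) return 'safari';
    return 'unknown';
}

// Returns human-readable warnings; an empty array means no mismatch found.
function checkUaMatchesLauncher(
    userAgent: string,
    launcher: Launcher,
    fingerprintBrowsers: string[],
): string[] {
    const warnings: string[] = [];
    const actual = EXPECTED_FAMILY[launcher];
    const claimed = uaBrowserFamily(userAgent);
    if (claimed !== actual) {
        warnings.push(`User-Agent claims ${claimed}, but the launcher is ${launcher} (${actual})`);
    }
    for (const name of fingerprintBrowsers) {
        if (name !== actual) {
            warnings.push(`Fingerprint requests ${name}, but the launcher is ${launcher} (${actual})`);
        }
    }
    return warnings;
}

// The user agent from config.js, checked against `launcher: firefox`.
const POST_UA =
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4240.198 Safari/537.36';
console.log(checkUaMatchesLauncher(POST_UA, 'firefox', ['chrome'])); // two warnings
```

If the check reports a mismatch, one option is to make all three agree, either by switching to the Chromium launcher or by requesting a Firefox fingerprint and dropping the hard-coded Chrome user agent.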


Can you please provide a link to your run on the Apify platform? What is the target website?

We will try to look into it more deeply.

Thanks.

optimistic-goldOP•3y ago

```typescript
export const crawlerOptions = {
    extraHeaders: {
        Accept: '*/*',
        'Accept-Encoding': 'gzip, deflate, br',
        'Accept-Language':
            'pt-BR,pt;q=0.9,en;q=0.8,en-GB;q=0.7,en-US;q=0.6',
        Connection: 'keep-alive',
        // eslint-disable-next-line max-len
        Cookie: 'rmbs=3; aps03=cf=N&cg=1&cst=0&ct=28&hd=N&lng=33&oty=2&tzi=16; cc=1; qBvrxRyB=A45IeaqEAQAAWy947jhK5mBXhZKpg0wmrWpF8YvPIs8kAW3F66ISs9f9JVHIAbtW4TWucm46wH8AAEB3AAAAAA|1|1|95c2dbb2f3558a5131fcb4a3ed6ce2c4fa54a062; _ga=GA1.1.734257009.1669307460; _ga_45M1DQFW2B=GS1.1.1669307459.1.1.1669309006.0.0.0; __cf_bm=.1bioOUncIlhLl66vFpebwEu64aQgKMpmaHf4CurRZc-1673977339-0-AeuOKVMdXQmfrz6vbkvSBxvqGq1Y/U+XQPRpDpMcBbhyYIM5vIcWgPiYlDsMT2yL3zCRMpCQQuJFfcUloPxbuRU=; pstk=689A9290987C448FB07976BB33B1B4DF000003; swt=AbtPT2kmQ9ifaI6zOZfiKAovxtDVn+ZH4oIEsrB7xXPDdRLIEdT9jdKP0EdzUs4Vna7rxfCNaqjLULJ3Q8YFrcFtrBlulVQA3lWAH3REt51Mck3v',
    },
    userAgent: 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/87.0.4240.198 Safari/537.36',
    virtualFootballUrl: 'https://www.bet365.com/#/AVR/B146/R^1/',
};
```
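The `Cookie` entry above is a raw header copied from a browser session. Playwright has no `cookie` launch option, so the `cookie:` key in the crawler's `launchOptions` is not a recognized setting (the `as LaunchOptions` cast keeps the compiler from flagging it), and the cookies can reach the site only through `extraHTTPHeaders`, if at all. Several values also look session-bound (for example `__cf_bm`, Cloudflare's short-lived bot-management cookie), so replaying them from a different IP and browser may not help. If you do want to set cookies explicitly, Playwright's `BrowserContext.addCookies()` expects objects with `name`, `value`, and either `url` or `domain` plus `path`. A minimal sketch of converting the header string into that shape; `parseCookieHeader` is a hypothetical helper, and the `.bet365.com` domain is an assumption based on `virtualFootballUrl`:

```typescript
// Mirrors the fields Playwright's BrowserContext.addCookies() accepts
// when a cookie is scoped by domain + path instead of by url.
interface CookieParam {
    name: string;
    value: string;
    domain: string;
    path: string;
}

// Splits a raw "Cookie" request header into individual cookies.
// Only the first "=" separates name from value, because values such as
// "cf=N&cg=1&..." (aps03) may themselves contain "=".
function parseCookieHeader(header: string, domain: string): CookieParam[] {
    return header
        .split(';')
        .map((part) => part.trim())
        .filter((part) => part.length > 0)
        .map((part) => {
            const eq = part.indexOf('=');
            const name = eq === -1 ? part : part.slice(0, eq);
            const value = eq === -1 ? '' : part.slice(eq + 1);
            return { name, value, domain, path: '/' };
        })
        .filter((cookie) => cookie.name.length > 0);
}

// A shortened excerpt of the header from config.js.
const sample = 'rmbs=3; aps03=cf=N&cg=1&cst=0; cc=1';
console.log(parseCookieHeader(sample, '.bet365.com'));
```

In a `PlaywrightCrawler`, the result could then be passed to `page.context().addCookies(...)`, for example from a `preNavigationHooks` entry; that wiring is untested here.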


```dockerfile
# Specify the base Docker image. You can read more about
# the available images at https://crawlee.dev/docs/guides/docker-images
# You can also use any other image from Docker Hub.
FROM apify/actor-node-playwright-firefox:16 AS builder

# Copy just package.json and package-lock.json
# to speed up the build using Docker layer cache.
COPY --chown=myuser package*.json ./

# Install all dependencies. Don't audit to speed up the installation.
RUN npm install --include=dev --audit=false

# Next, copy the source files using the user set
# in the base image.
COPY --chown=myuser . ./

# Build the project (dependencies were installed in the previous step).
RUN npm run build

# Create final image
FROM apify/actor-node-playwright-firefox:16

# Copy just package.json and package-lock.json
# to speed up the build using Docker layer cache.
COPY --chown=myuser package*.json ./

# Install NPM packages, skip optional and development dependencies to
# keep the image small. Avoid logging too much and print the dependency
# tree for debugging
RUN npm --quiet set progress=false \
    && npm install --omit=dev --omit=optional \
    && echo "Installed NPM packages:" \
    && (npm list --omit=dev --all || true) \
    && echo "Node.js version:" \
    && node --version \
    && echo "NPM version:" \
    && npm --version \
    && rm -r ~/.npm

# Copy built JS files from builder image
COPY --from=builder --chown=myuser /home/myuser/dist ./dist

# Next, copy the remaining files and directories with the source code.
# Since we do this after NPM install, quick build will be really fast
# for most source file changes.
COPY --chown=myuser . ./

# Run the image. If you know you won't need headful browsers,
# you can remove the XVFB start script for a micro perf gain.
CMD ./start_xvfb_and_run_cmd.sh && npm run start:prod --silent
```


Could you also share a link to your runs on the platform?

optimistic-goldOP•3y ago

How can I get this link?

like-gold•3y ago

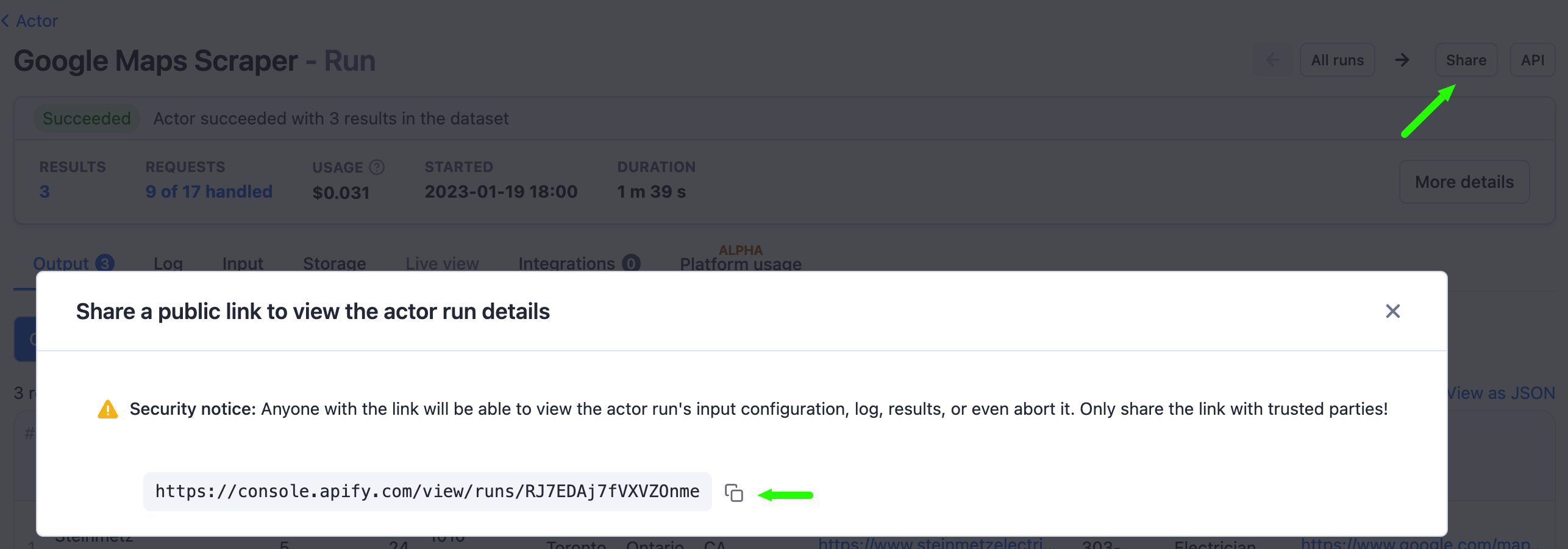

@Manuel Antunes here how you can get the run link:

optimistic-goldOP•3y ago

I don't see how that would help.

genetic-orange•2y ago

Any updates on this?