xenial-black•2y ago

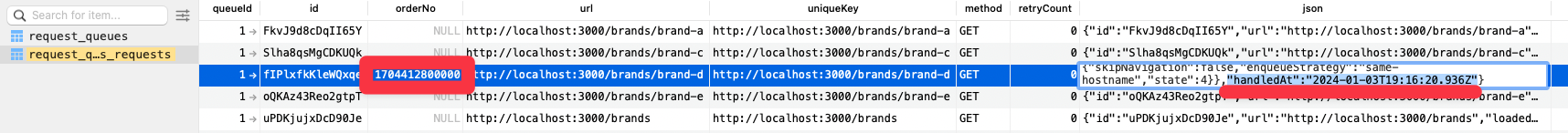

Workflow for manually reprocessing requests when using @apify/storage-local for SQLite Request Queue

Use case: I'm debugging a crawler. Most request handlers succeed; only a few fail. I want to fix/adjust the request handler logic, get the failed URLs from the logs, open an SQLite editor, find those requests in the request queue table and somehow mark them as unprocessed. Then I rerun the crawler with CRAWLEE_PURGE_ON_START=false so it only runs the previously problematic URLs. I iterate a few times to catch all the bugs, and then run the whole crawler with purged storage.
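For the "get the failed URLs from the logs" step, a small helper can collect them from a saved log file. This is a minimal TypeScript sketch, not part of Crawlee: `failedUrlsFromLog` and its `marker` parameter are hypothetical, and it assumes each failure line contains a marker word such as "failed" plus the URL verbatim. Adjust the filter to whatever your crawler actually logs.

```typescript
// Hypothetical helper, not a Crawlee API.
// ASSUMPTION: failure lines contain `marker` (case-insensitive) and the URL verbatim.
function failedUrlsFromLog(logText: string, marker: string = "failed"): string[] {
    const urlPattern = /https?:\/\/[^\s"'<>]+/g;
    const needle = marker.toLowerCase();
    const unique = new Set<string>();
    for (const line of logText.split(/\r?\n/)) {
        if (!line.toLowerCase().includes(needle)) continue; // not a failure line
        for (const match of line.match(urlPattern) ?? []) {
            // Drop punctuation that commonly trails a URL in log prose.
            unique.add(match.replace(/[),.;]+$/, ""));
        }
    }
    return Array.from(unique); // order = first appearance in the log
}
```

Deduplicating with a `Set` keeps the list short when the same URL failed several times across retries.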

After a lot of debugging and investigating Crawlee and @apify/storage-local, I've managed to figure out a working workflow, but it's kinda laborious:

* set the row's orderNo to some future date (ms since epoch)

* edit the row's json and remove the handledAt property [2]

* run the crawler, which re-adds the handledAt property

* delete the row's orderNo (not sure why that isn't done automatically)
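The first two manual steps can be collapsed into a single UPDATE pasted into the SQLite editor. The TypeScript sketch below only builds that SQL string; it executes nothing. Assumptions, all to be checked in your editor: the table name is passed in as `table` (I'm not asserting the real name used by @apify/storage-local), the table has `url`, `orderNo` and `json` columns, and your SQLite build includes the JSON1 function `json_remove()`. `buildRequeueSql` is a hypothetical helper.

```typescript
// Hypothetical helper that only builds SQL text; nothing is executed here.
// ASSUMPTIONS: `table` is the request queue table in your local storage DB,
// it has `url`, `orderNo` and `json` columns, and SQLite has json_remove().

// Standard SQL string literal: wrap in single quotes, double inner quotes.
function sqlString(value: string): string {
    return "'" + value.replace(/'/g, "''") + "'";
}

// Quoted identifier, so unusual table names still work.
function sqlIdentifier(name: string): string {
    return '"' + name.replace(/"/g, '""') + '"';
}

function buildRequeueSql(
    table: string,
    urls: string[],
    // "Some future date in ms from epoch"; one minute ahead is an arbitrary choice.
    orderNo: number = Date.now() + 60000,
): string {
    if (urls.length === 0) throw new Error("no URLs given");
    return [
        `UPDATE ${sqlIdentifier(table)}`,
        `SET orderNo = ${Math.trunc(orderNo)},`, // give the row an order number again
        `    json = json_remove(json, '$.handledAt')`, // drop handledAt from the stored request
        `WHERE url IN (${urls.map(sqlString).join(", ")});`,
    ].join("\n");
}
```

Run the generated statement in the SQLite editor, then start the crawler with CRAWLEE_PURGE_ON_START=false. It does nothing about the last manual step (clearing orderNo after the run).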

That's kinda tedious; do you know of a better way? Or is there an out-of-the-box approach for my use case that doesn't involve hacking SQLite? I found this approach recommended by the one-and-only @Lukas Krivka here 🙂 https://github.com/apify/crawlee/discussions/1232#discussioncomment-1625019

[1]

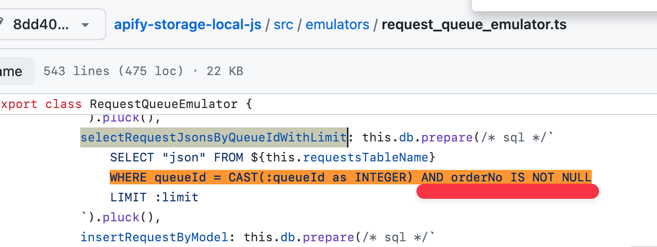

https://github.com/apify/apify-storage-local-js/blob/8dd40e88932097d2260f68f28412cc29ff894e0f/src/emulators/request_queue_emulator.ts#L341

[2]

https://github.com/apify/crawlee/blob/52b98e3e997680e352da5763b394750b19110953/packages/core/src/storages/request_queue.ts#L164

xenial-black (OP)•2y ago

Daringly also tagging @vladdy as the SQLite specialist :)) (really nice work on the local storage adapter 🙏)

extended-salmon•2y ago

Can't you just update the request with handledAt: null and forefront: true/false?

👀👀

xenial-black (OP)•2y ago

IIUC, no, because of this

xenial-black (OP)•2y ago

It's not a huge issue, but it's kinda awkward, so I was wondering if I'm approaching it with the wrong mental model 🙂

Can't you just put those failed requests into another queue, and at start have some logic that uses that queue if it exists and isn't empty?
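A minimal sketch of this second-queue idea in TypeScript. To keep it self-contained it codes against a hypothetical `QueueLike` interface rather than Crawlee itself; Crawlee's `RequestQueue` does expose `isEmpty()` and `addRequest()`, but check the exact signatures for your version. `pickQueue` and `rememberFailure` are illustrative names, not Crawlee APIs.

```typescript
// Hypothetical minimal interface standing in for Crawlee's RequestQueue,
// which has isEmpty() and addRequest(); verify signatures for your version.
interface QueueLike {
    isEmpty(): Promise<boolean>;
    addRequest(request: { url: string }): Promise<unknown>;
}

// At startup: crawl the failed-requests queue if it exists and has work,
// otherwise fall back to the main queue.
async function pickQueue(main: QueueLike, failed?: QueueLike): Promise<QueueLike> {
    if (failed !== undefined && !(await failed.isEmpty())) return failed;
    return main;
}

// Call this from the crawler's failed-request hook to save the URL for the next run.
async function rememberFailure(failed: QueueLike, url: string): Promise<void> {
    await failed.addRequest({ url });
}
```

In a real crawler you would open both queues by name, pass the one returned by `pickQueue` via the crawler's `requestQueue` option, and call `rememberFailure` from `failedRequestHandler`. Since a queue deduplicates by `uniqueKey`, re-adding a URL the same queue has already handled is a no-op, so each iteration's failures are best written to a fresh (or purged) queue.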

extended-salmon•2y ago

Oof yeah, that's... wack. Although updating shouldn't even hit that statement.

Yeah, no, updating should let you set orderNo too.

So calling storage.updateRequest should let you set orderNo and handledAt: null.
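Whether an update with handledAt: null is enough comes down to how the queue orders rows. The TypeScript model below encodes my understanding of that rule, which I have not verified line by line against request_queue_emulator.ts: a handled request gets `orderNo = null` and drops out of the queue head, while a pending one gets the current timestamp, negated when `forefront` is true so it sorts first. `QueueRow`, `calculateOrderNo`, `updateRow` and `head` are illustrative names, not the library's API. Under this rule a handled row would end up with a null orderNo, which matches the last manual step in the original workflow.

```typescript
// Illustrative model of the ordering rule as I understand it; not library code.
interface QueueRow {
    id: string;
    handledAt: string | null; // ISO timestamp once handled, null while pending
    orderNo: number | null;   // null = handled, otherwise sort key for the head
}

// Handled rows get no order number; pending rows get a timestamp,
// negated for forefront so they sort before every non-forefront row.
function calculateOrderNo(handledAt: string | null, forefront: boolean, now: number): number | null {
    if (handledAt !== null) return null;
    return forefront ? -now : now;
}

// An update recomputes orderNo, so handledAt: null puts the row back in the queue.
function updateRow(row: QueueRow, handledAt: string | null, forefront: boolean, now: number): QueueRow {
    return { ...row, handledAt, orderNo: calculateOrderNo(handledAt, forefront, now) };
}

// Queue head: pending rows only, lowest orderNo first (input array is not mutated).
function head(rows: QueueRow[], limit: number): QueueRow[] {
    return rows
        .filter((row) => row.orderNo !== null)
        .sort((a, b) => (a.orderNo as number) - (b.orderNo as number))
        .slice(0, limit);
}
```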

xenial-black (OP)•2y ago

For now, I solved it in a very hacky but convenient way :))

Thx @HonzaS for the idea

Crawler options

During my custom init

xenial-black (OP)•2y ago

GitHub

Apify cli command to put failed requests back to queue · Issue #136...

Describe the feature There was a time in beta, handled and pending request in queue were in JSON format. If we wanted to retry some failed requests, we can simply put it back to pending request man...