harsh-harlequin•2y ago

How to instantiate one crawler and keep running it with incoming requests

So, I'm trying to understand what I'm doing wrong architecturally. I define a crawler using PlaywrightCrawler, add some URLs to the requestQueue, and it runs fine. I can then load more URLs into the crawler, call run() again, and that works too. But in my case the crawler will be fed new requests from a queue on an ongoing basis, and I'm not sure how to architect that.

If each crawler receives one URL, processes it, and shuts down, it becomes effectively impossible to run things in parallel.

When you try to add things to the queue while the crawler is running (using addRequests), that seems to fail as well.

So, how do I architect this?

This is my example code for reference.

(I tried to include the example code I'm using, but it was too long, so here's a gist: https://gist.github.com/wflanagan/2ea9316db8d3173f5ad3fbde2443ca67)

21 Replies

xenial-black•2y ago

Same issue here; we haven't found a good approach either.

Probably this: https://docs.apify.com/academy/running-a-web-server. The idea is to keep the crawler running even after all requests are finished, and to accept new requests for processing through ExpressJS (or any other mechanism you prefer).

harsh-harlequinOP•2y ago

Can you only do this on the Apify platform?

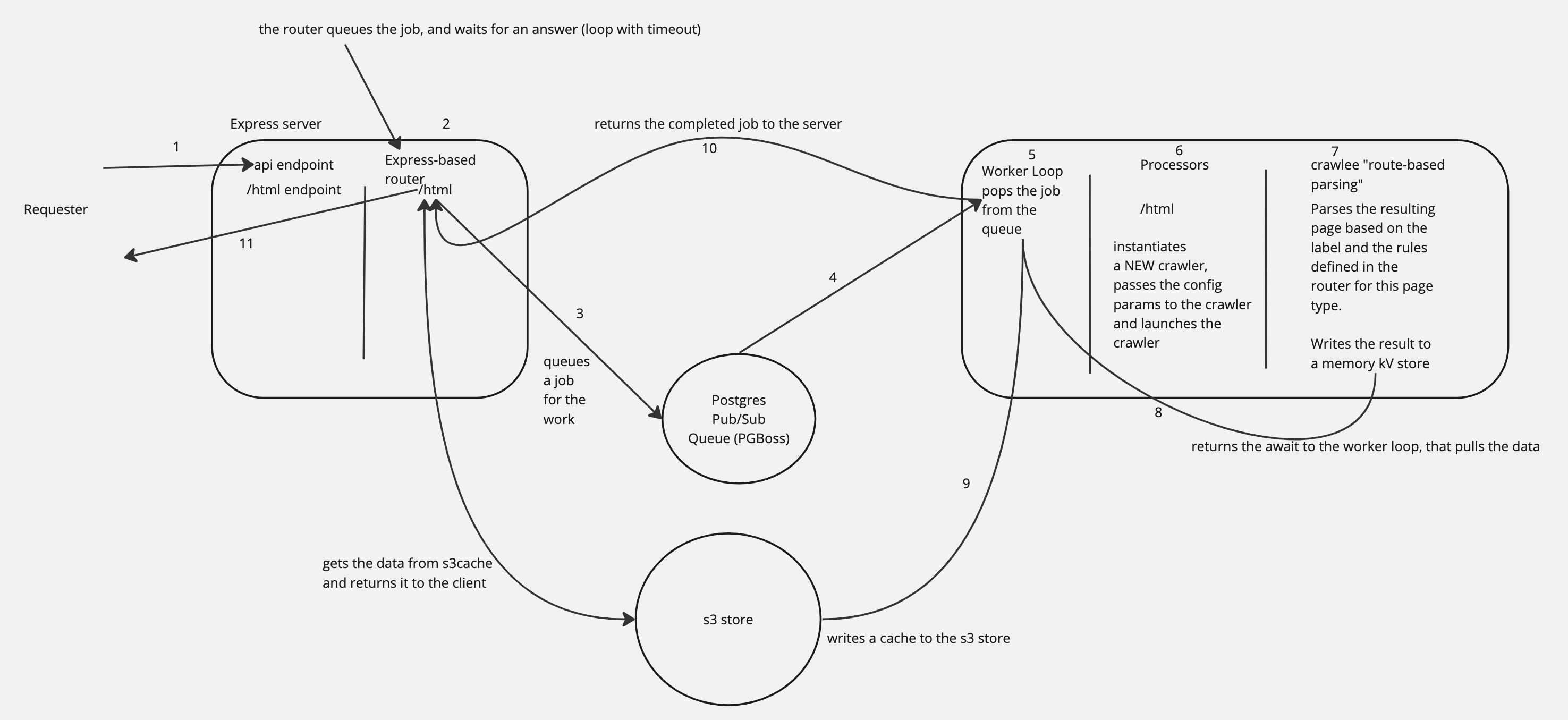

My current architecture uses Express: we push jobs to a queue, which is picked up by multiple workers.

A worker takes the job, and the job has already been labeled for the right router.

Then the router performs the work and pushes the results back, and we pass them back, completing the job.

And that example doesn't use Crawlee at all?

In Crawlee, "workers" are logically a single crawler with autoscaling. From your description it sounds like you want to manage your own child instances; the closest match for that is a named request queue, but there are no examples of that approach.

harsh-harlequinOP•2y ago

Well, I don't.

Ideally I spin up a single crawler. It then idles until I send it jobs, and it retrieves them.

That's how I've tried to configure it, with each URL spinning up a crawler, doing the one page, and then shutting the crawler down.

Here is a quick, two-minute write-up of what I'm doing:

harsh-harlequinOP•2y ago

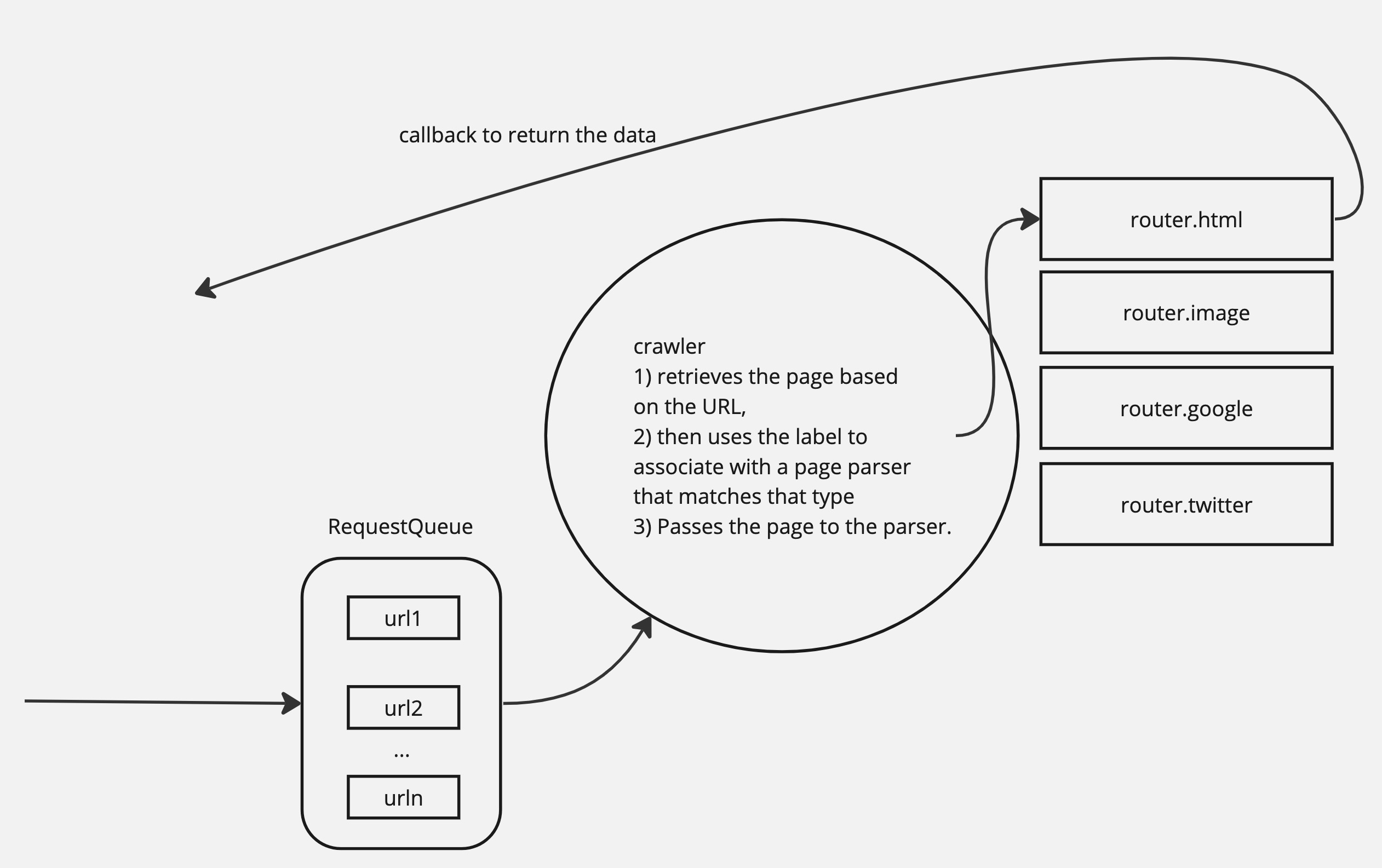

I went with Crawlee because I thought I'd be able to route to different parsers based on the URL (which I guess I can do). But the problem seems to be that I can't queue additional items for the crawler to process once it completes a page. It's really designed for a crawler that you point at one site to scrape that website, rather than for something where we pass URLs to it and it retrieves and parses different jobs based on the URL or config params themselves. Basically, once a crawler gets a set of URL requests, you can't add more requests until those are done and the crawler stops running, at least as I understand it.
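Routing different URLs to different parsers inside one long-lived process is a small piece of logic on its own. Below is a minimal, dependency-free sketch that picks a handler by an explicit label, falls back to the URL's hostname, and then to a default. The method names `addHandler` and `addDefaultHandler` echo Crawlee's router (`createPlaywrightRouter()`), but `makeRouter`, the hostname fallback, and the `{ url, label }` job shape are assumptions made up for this sketch, not Crawlee's API.

```javascript
// Hypothetical standalone router (not Crawlee's implementation): picks a parser
// by the job's label if it has one, otherwise by hostname, else a default.
function makeRouter() {
  const handlers = new Map(); // label or hostname -> handler function
  let defaultHandler = null;

  return {
    addHandler(key, handler) {
      handlers.set(key, handler);
    },
    addDefaultHandler(handler) {
      defaultHandler = handler;
    },
    // Route one job of the shape { url, label? } to the matching handler.
    route(job) {
      const key = job.label ?? new URL(job.url).hostname;
      const handler = handlers.get(key) ?? defaultHandler;
      if (!handler) throw new Error(`No handler for ${job.url}`);
      return handler(job);
    },
  };
}

// Usage: one router serves jobs for different sites.
const router = makeRouter();
router.addHandler('PRODUCT', (job) => `product parser for ${job.url}`);
router.addHandler('news.example.com', (job) => `news parser for ${job.url}`);
router.addDefaultHandler((job) => `generic parser for ${job.url}`);

console.log(router.route({ url: 'https://news.example.com/story/1' }));
// prints "news parser for https://news.example.com/story/1"
```

In Crawlee itself, the label travels on the request (for example `crawler.addRequests([{ url, label: 'PRODUCT' }])`) and the router is passed to the crawler as its `requestHandler`.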

harsh-harlequinOP•2y ago

This is a picture of what I thought I was building.

But what I'm finding is that you give the requestQueue one URL, and then once the crawler is running, you can't queue anything until the crawler has stopped.

Once the crawler has stopped, you can add things to the request queue.

harsh-harlequinOP•2y ago

But if you try to queue while the crawler is running, via either crawler.addRequests or requestQueue.addRequests, it throws an error and/or doesn't accept the jobs, and never returns data.

I have also tried, for example with 5 "threads", creating 5 crawlers to work in parallel, but that seems to confuse things and doesn't work well at all: things error out and time out. So one Crawlee crawler instance is the right strategy, but I can't figure out how to queue work for that instance and have it reliably perform the work.

I've spent the whole weekend trying different architectures for this, and none of them have worked. So clearly I'm doing something wrong.
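Instead of five crawler instances for five "threads", the usual shape is one worker with a concurrency limit; Crawlee exposes this on a single crawler through options such as `maxConcurrency`. The helper below is a hypothetical, dependency-free sketch of that bounded-parallelism idea only. Crawlee's own autoscaling also adjusts concurrency based on system load, which this sketch does not attempt.

```javascript
// Hypothetical helper: run `handler` over `items` with at most `limit`
// calls in flight at once, all inside one process.
async function mapWithConcurrency(items, limit, handler) {
  const results = new Array(items.length);
  let next = 0; // index of the next unclaimed item

  // Each lane keeps claiming the next item until none are left. Claiming is
  // safe because the synchronous `next++` cannot interleave with other lanes.
  async function lane() {
    while (next < items.length) {
      const i = next++;
      results[i] = await handler(items[i], i);
    }
  }

  const laneCount = Math.min(Math.max(1, limit), items.length);
  await Promise.all(Array.from({ length: laneCount }, () => lane()));
  return results; // same order as `items`
}

// Usage: process five hypothetical URLs, two at a time.
const urls = [1, 2, 3, 4, 5].map((n) => `https://example.com/page/${n}`);
mapWithConcurrency(urls, 2, async (url) => `done: ${url}`).then((results) => {
  console.log(results.length); // 5
});
```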

Adding requests to the crawler's request queue while the crawler is running is basic Crawlee functionality. That certainly works and should not throw errors.

I am currently developing a web scraping framework with features along those lines: you push a task, it starts processing the task (scraping data), and it pushes the data to pipelines (which can process the results or export them to CSV/JSON/a database, etc.). The framework isn't complete yet; it still lacks many features, such as HTML parsing and retries, but it's under development and we'll get there eventually.
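The push-a-task, then-pipeline flow described here can be sketched as a chain of stages that each transform a scraped item or drop it. This is a hypothetical, dependency-free illustration; `makePipeline` and the example stages are made up for this sketch and are not that framework's code, which isn't shown in the thread.

```javascript
// Hypothetical result pipeline: each stage receives the item from the
// previous stage and returns a new item, or null to drop it.
function makePipeline(...stages) {
  return (item) => {
    let current = item;
    for (const stage of stages) {
      current = stage(current);
      if (current === null) return null; // a stage filtered this item out
    }
    return current;
  };
}

// Quote a value for CSV: wrap in double quotes and double any inner quotes.
const csvField = (value) => `"${String(value).replace(/"/g, '""')}"`;

// Example stages: clean the title, drop items without one, export as a CSV row.
const trimTitle = (item) => ({ ...item, title: (item.title ?? '').trim() });
const requireTitle = (item) => (item.title ? item : null);
const toCsvRow = (item) => `${csvField(item.url)},${csvField(item.title)}`;

const toCsv = makePipeline(trimTitle, requireTitle, toCsvRow);
console.log(toCsv({ url: 'https://example.com/a', title: '  Hello  ' }));
// prints "https://example.com/a","Hello"
```

Keeping each stage a plain function makes it easy to swap the last stage for a JSON or database exporter without touching the scraping side.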

harsh-harlequinOP•2y ago

@gtry Yeah, I have some of that myself.

@gtry I'd love some feedback if you've got the backend part of how you're managing crawler instances.

@HonzaS It is. Here's the code; it errors when calling run():

And the error is:

eager-peach•2y ago

@wflanagan Not sure if this is the correct approach, but one thing I did was to check whether the crawler is already running before calling run() again. As far as I know, if the crawler is running and you add new URLs to it, it processes them automatically; you only need to call run() again if the crawler has stopped.
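A small sketch of that check: start a run only if one isn't already in progress, and otherwise reuse the one in flight. `makeRunGuard` and `startRun` are hypothetical names; with Crawlee, `startRun` would presumably wrap `() => crawler.run()`, and new URLs would still go in through `crawler.addRequests()`. The guard keeps its own state rather than relying on any crawler property. One caveat of this pattern: if the current run has already decided to finish just as new URLs arrive, those URLs wait until the next `ensureRunning()` call.

```javascript
// Hypothetical guard: calls startRun() only when no run is in progress;
// concurrent callers share the same in-flight run promise.
function makeRunGuard(startRun) {
  let current = null; // promise for the run in progress, or null when idle

  return function ensureRunning() {
    if (current === null) {
      current = Promise.resolve()
        .then(startRun)
        .finally(() => {
          current = null; // the run ended, so the next call may start a new one
        });
    }
    return current;
  };
}
```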

harsh-harlequinOP•2y ago

Yeah, I'm working on that path now, enabling the crawler to run.

I just wrote a sample that starts the crawler and then queues 3 URLs to it, and it worked.

Here's the sample, for posterity. I'm going to try reworking things to this approach and see how it does.

harsh-harlequinOP•2y ago

Gist: example of running the crawler, then adding requests to it (example.mjs)

Actually, the current framework is written in Go. It has a scheduler and manages a pool of workers internally, so I just push tasks, it processes them as they come in, and it pushes the results down to the pipeline manager for transformation and exporting.

Why would you call .run() again? Did you try the keepAlive option? https://crawlee.dev/api/playwright-crawler/interface/PlaywrightCrawlerOptions#keepAlive
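To see why the crawler seemed to "stop", here is a toy model (not Crawlee code) of the two lifecycles: without keep-alive, `run()` resolves as soon as the queue is empty; with it, `run()` idles and wakes up when new requests are added, until it is told to stop. In Crawlee the switch is the `keepAlive` crawler option linked above, used together with `crawler.addRequests()`; `ToyCrawler`, its `stop()` method, and its one-request-at-a-time loop are simplifications made up for this sketch.

```javascript
// Toy model of a crawler's run loop (not Crawlee's implementation).
class ToyCrawler {
  constructor({ keepAlive = false, handler }) {
    this.keepAlive = keepAlive; // keep waiting for work when the queue is empty?
    this.handler = handler;     // async (url) => void
    this.queue = [];
    this.wake = null;           // resolves the idle wait, if the loop is idling
    this.stopped = false;
  }

  // Safe to call at any time, including while run() is in progress.
  addRequests(urls) {
    this.queue.push(...urls);
    this.wakeUp();
  }

  stop() {
    this.stopped = true;
    this.wakeUp();
  }

  wakeUp() {
    if (this.wake) {
      this.wake();
      this.wake = null;
    }
  }

  async run() {
    while (!this.stopped) {
      if (this.queue.length === 0) {
        if (!this.keepAlive) return; // queue drained: run() finishes here
        await new Promise((resolve) => { this.wake = resolve; }); // idle until woken
        continue;
      }
      await this.handler(this.queue.shift());
    }
  }
}
```

With `keepAlive: false` the loop returns once its queue drains, which matches the behaviour described earlier in the thread: anything added after that point needs another `run()` call.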

harsh-harlequinOP•2y ago

I have moved to that architecture, and yes, it is improving things.

I think this is a cluster of problems. For example, I think proxy rotation isn't working well, and it's throwing timeouts on proxies. I'm reaching out to multiple proxy providers to see if there's a problem with trying to keep connections alive, etc.