foreign-sapphire•2y ago

How to reset crawlee URL cache/add the same URL back to the requestQueue?

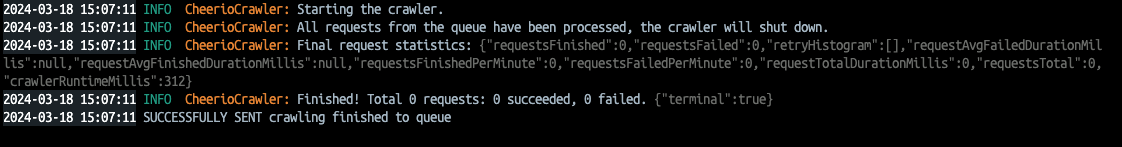

I have a CheerioCrawler running in a Docker container. It listens for incoming messages from a single RabbitMQ queue, and each message is a URL. The crawler runs and finishes the first crawling job successfully. However, if it then receives a second message with the same URL as the first, it only outputs the message in the screenshot I've attached. Basically, it doesn't add the second URL to the crawler's queue. What would be a solution for this? I have thought about restarting the crawler or emptying the key-value storage, but I can't seem to get either working. In my crawlerConfigs, I am setting 'purgeOnStart' and 'persistStorage' to false.

My code looks something like this:

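    import { CheerioCrawler } from 'crawlee';

    const crawler = new CheerioCrawler({
        async requestHandler({ $, request, enqueueLinks }) {
            await enqueueLinks({
                // strategy is an option of enqueueLinks itself, not of the transform function
                strategy: 'same-domain',
                transformRequestFunction(req) {
                    return req;
                },
            });
        },
    }, crawlerConfigs); // crawlerConfigs sets purgeOnStart and persistStorage to false

    // websiteUrls holds the URL(s) received from RabbitMQ
    await crawler.addRequests(websiteUrls);
    await crawler.run();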

6 Replies

stormy-gold•2y ago

Am I understanding correctly that the second message is the same URL? So essentially the crawler is running and you crawl example.com/images/index.html, and then later, while the crawler is still running, you begin a crawl of the exact same URL? If so, you'll need to pass a unique key. The default unique key is the URL, so if the second crawl uses the same URL it is not considered unique (unless you override uniqueKey). Hope that helps.
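
A minimal sketch of the unique-key idea, assuming each RabbitMQ message can be tagged with some job identifier. The helper name `buildRequest` and the `jobId` values are hypothetical. Only the `url` and `uniqueKey` fields correspond to what Crawlee reads from a request object.

```javascript
// Hypothetical helper: builds a request whose uniqueKey differs per job,
// so the queue does not treat an already-seen URL as a duplicate.
function buildRequest(url, jobId) {
    return { url, uniqueKey: `${jobId}:${url}` };
}

const first = buildRequest('https://example.com/images/index.html', 'job-1');
const second = buildRequest('https://example.com/images/index.html', 'job-2');

console.log(first.url === second.url);             // true
console.log(first.uniqueKey === second.uniqueKey); // false
```

The resulting objects can be passed in place of plain URL strings, for example `await crawler.addRequests([second])`. Links found through `enqueueLinks` would still be deduplicated by URL unless they also get a per-job uniqueKey, which could be set inside `transformRequestFunction`.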


foreign-sapphireOP•2y ago

Yes, the second message is the same URL. The difference is that I am sending the second message only after the first "crawling job" is done. After that, I want to be able to begin a crawl of the exact same initial URL. But I think it gets skipped because it's already stored inside the crawler's local storage. Is there any way I can just reset this storage after each crawling job on an initial URL is done? Even restarting the crawler (or manually ending the crawler.run() call with a line of code) would be fine, but crawler.teardown() doesn't seem to help in my situation.

absent-sapphire•2y ago

@mesca4046 You are probably using a named queue, which doesn't get cleared automatically or by crawler.teardown(). Use an unnamed queue, or drop it after each run.
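
A rough sketch of "drop it after each run", written with injected dependencies so it does not assume a particular setup. `runJob`, `openQueue`, and `makeCrawler` are hypothetical names. The only Crawlee behavior assumed is that a queue object has a `drop()` method and that a crawler's `run()` accepts a list of start URLs.

```javascript
// Hypothetical wrapper: one fresh queue per job, dropped when the job ends.
// openQueue is expected to behave like crawlee's RequestQueue.open,
// and makeCrawler should return a crawler built on the queue it receives.
async function runJob(urls, { openQueue, makeCrawler }) {
    const requestQueue = await openQueue();
    const crawler = makeCrawler(requestQueue);
    try {
        await crawler.run(urls);
    } finally {
        // Remove the queue and its deduplication state, even if the run failed.
        await requestQueue.drop();
    }
}
```

With Crawlee this might be wired up as `runJob(urls, { openQueue: () => RequestQueue.open(), makeCrawler: (requestQueue) => new CheerioCrawler({ requestQueue, requestHandler }) })`, creating a new crawler for every incoming message rather than reusing one instance.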

foreign-sapphireOP•2y ago

@Hamza I am definitely not using a named queue. Any advice on how I might drop it after each run?

absent-sapphire•2y ago

Try setting purgeOnStart to true
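
For reference, a sketch of what that change could look like, assuming crawlerConfigs is built from a plain options object. The variable name `configOptions` is hypothetical. `purgeOnStart` and `persistStorage` are the two settings mentioned in the question.

```javascript
// Assumed shape of the options behind crawlerConfigs, with purgeOnStart flipped.
const configOptions = {
    purgeOnStart: true,    // was false in the original setup
    persistStorage: false, // unchanged from the question
};

// With crawlee installed, this would typically be wrapped as:
//   const crawlerConfigs = new Configuration(configOptions);
//   const crawler = new CheerioCrawler({ requestHandler }, crawlerConfigs);
console.log(configOptions.purgeOnStart); // true
```

Whether the purge runs again for a second crawler.run() inside the same long-lived process is worth checking against the Crawlee version in use.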