underlying-yellow•2y ago

TargetClosedError

👋

I always get this error about 5 minutes after I start scraping (it works normally until the error appears).

12 Replies

underlying-yellowOP•2y ago

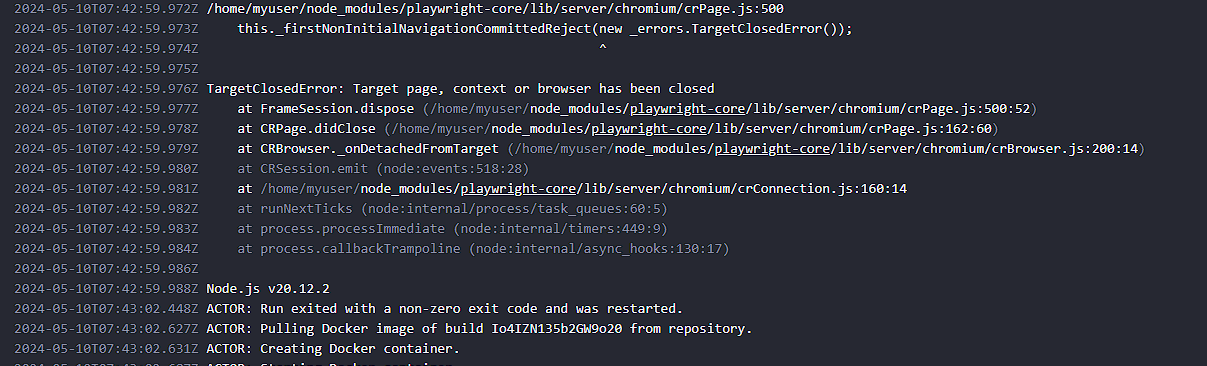

error:

After 5 minutes of crawling I get this error. (I'm not doing anything else while the crawler runs, because my CPU usage is high.)

Fixed. For others facing this issue:

It's an autoscaling issue.

The best solution I found is to use pm2 to restart automatically on error. The queue must be saved, so that on restart the crawl continues from the point where the error occurred.
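A minimal sketch of that pm2 setup, written as a typed object so the fields are explicit. pm2 normally reads this from an `ecosystem.config.js` file that exports the object; the app name and script path here are hypothetical. It assumes the queue lives in Crawlee's default local storage, which Crawlee purges on start unless `CRAWLEE_PURGE_ON_START` is turned off.

```typescript
// Hand-written type covering only the pm2 app options used in this sketch.
interface Pm2App {
  name: string;
  script: string;
  autorestart: boolean;
  restart_delay: number;
  env: Record<string, string>;
}

const ecosystem: { apps: Pm2App[] } = {
  apps: [
    {
      name: "crawler",          // hypothetical app name
      script: "dist/main.js",   // hypothetical entry point of the crawler
      autorestart: true,        // restart the process when it exits after an error
      restart_delay: 5000,      // wait 5 s before restarting
      env: {
        // Keep the request queue from the previous run instead of purging it,
        // so the restarted crawler continues with the pending requests.
        CRAWLEE_PURGE_ON_START: "false",
      },
    },
  ],
};
```

In a real `ecosystem.config.js` the same object would be assigned to `module.exports` and started with `pm2 start ecosystem.config.js`.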

Hey, which version of Crawlee are you using?

I think I have a similar problem.

After some time (not always 5 minutes) the Playwright crawler throws an error that kills the whole run.

I have set restartOnError, as you can see in the log, but it is far from ideal.

Hey, the team knows about this issue. In the meantime you could downgrade to 3.8.2.

@lemurio I currently have "crawlee": "^3.5.4" in package.json. When I tried "crawlee": "3.8.2", I get this in the log:

WARN PlaywrightCrawler:AutoscaledPool:Snapshotter: Memory is critically overloaded. Using 2646 MB of 977 MB (271%). Consider increasing available memory.

and concurrency goes to 1.

This can also be related to the fact that if you don't close pages manually, the handlers keep them open for a very long time.

@NeoNomade so you have await page.close() at the end of every handler function?

Absolutely

Thank you a lot! This really solved the problem. Not even one TargetClosedError anymore.
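The workaround above, calling page.close() at the end of every handler, can be sketched as a small wrapper so the close is not forgotten in any handler and still happens when the handler throws. The helper name `closePageAfter` and the two interfaces are hypothetical and only model the part of the crawling context this sketch needs; they are not part of Crawlee.

```typescript
// Minimal shapes assumed for this sketch; a Playwright page has a compatible close().
interface ClosablePage {
  close(): Promise<void>;
}

interface PageContext {
  page: ClosablePage;
}

// Wraps a handler so the page is closed once the handler finishes,
// whether it resolved or threw.
function closePageAfter<C extends PageContext>(
  handler: (ctx: C) => Promise<void>,
): (ctx: C) => Promise<void> {
  return async (ctx: C): Promise<void> => {
    try {
      await handler(ctx);
    } finally {
      await ctx.page.close();
    }
  };
}
```

With a PlaywrightCrawler, the wrapped function would be passed as the `requestHandler` option, for example `requestHandler: closePageAfter(async ({ page, request }) => { /* scrape */ })`.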

So it looks like it is a bug in the Crawlee library: it does not close pages fast enough.

It seems like the Crawlee dev team doesn't accept many things.

I also proposed that the images be based on Alpine, since they are safer and a lot smaller. But they keep using Debian.

Hey, sorry for the bug.

Can you please open a bug report with an explanation of it here: https://github.com/apify/crawlee/issues/new?assignees=&labels=bug&projects=&template=bug_report.yml

I will take it to the team, thanks!


Hello, the this._firstNonInitialNavigationCommittedReject(new _errors.TargetClosedError()) error is finally fixed in the latest Crawlee. See https://github.com/apify/crawlee/releases/tag/v3.10.3

Release v3.10.3 · apify/crawlee

3.10.3 (2024-06-07)

Bug Fixes

adaptive-crawler: log only once for the committed request handler execution (#2524) (533bd3f)

increase timeout for retiring inactive browsers (#2523) (195f176)

respec...