ambitious-aqua•2y ago

{"time":"2024-05-20T03:04:41.809Z","level":"WARNING","msg":"PuppeteerCrawler:AutoscaledPool:Snapshot

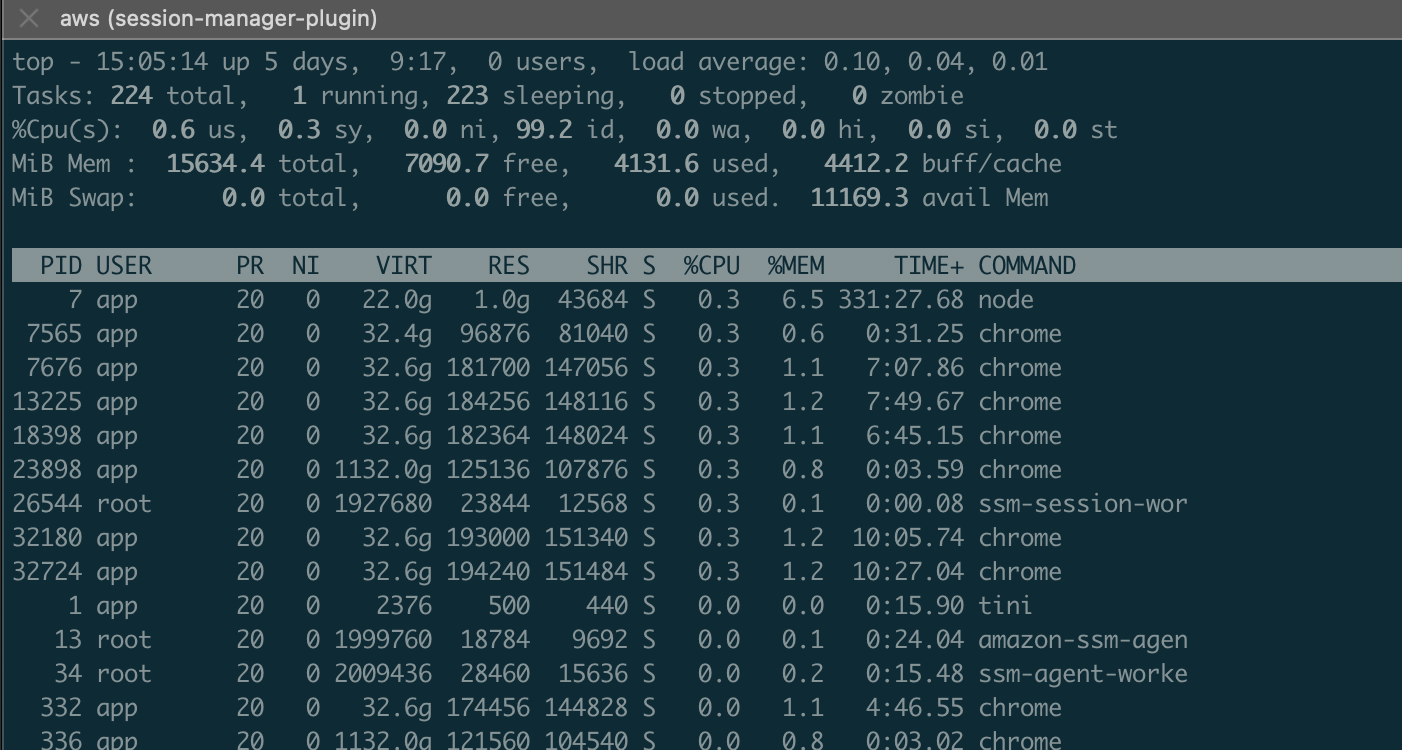

This error is happening consistently, even while only running 1 browser. When I load up the server and look at `top`, there are a bunch of long-running Chrome processes that haven't been killed.

`top` output attached:

Error:


ambitious-aquaOP•2y ago

That `top` output is with zero browsers currently running.

cc @NeoNomade @microworlds

@bmax To debug this, we would need to see the routes.

For example, even if you have `await page.close()` at the end of each handler, a step in the handler that hangs can still lead to this, because the close call is never reached.

It's hard to debug without the content of the routes
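To illustrate the hanging-step case: a minimal sketch in plain JavaScript that races a step against a timer, so the cleanup in `finally` is still reached. `withTimeout` and `exampleHandler` are hypothetical names, not Crawlee APIs. Note that the hung promise itself is not cancelled; the handler just stops waiting for it. Crawlee also enforces its own per-request limit through the `requestHandlerTimeoutSecs` crawler option.

```javascript
// Hypothetical helper: reject if `promise` hasn't settled within `ms` milliseconds.
// The original promise is NOT cancelled; we only stop waiting for it.
function withTimeout(promise, ms, label = 'operation') {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`${label} timed out after ${ms} ms`)), ms);
  });
  // Whichever settles first wins; always clear the timer so it can't keep Node alive.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Hypothetical route handler: a hanging evaluate() can no longer skip the cleanup.
async function exampleHandler({ page }) {
  try {
    await withTimeout(page.evaluate(() => document.title), 30_000, 'page.evaluate');
  } finally {
    await page.close();
  }
}
```

The timeout value is arbitrary; pick it per step based on how long that step normally takes.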

like-gold•2y ago

@bmax I see you're using Node.js. I would suggest that you kill all active running browsers/child processes (`page.close()` and `browser.close()` are not enough, especially when the script hangs).

When you launch a browser, get its process ID (in Puppeteer, `browser.process().pid`) and manually kill that process when you're done with the browser. You can use this library: https://www.npmjs.com/package/tree-kill. So instead of calling `browser.close()`, kill the browser's whole process tree with it.
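A minimal sketch of that idea, assuming plain Puppeteer (not Crawlee's BrowserPool) and the `tree-kill` npm package. In Puppeteer, `browser.process()` returns the spawned `ChildProcess`, or `null` when the browser was attached with `puppeteer.connect()`. `forceKillBrowser` is a hypothetical helper name, and the kill function is injectable so it can be swapped out.

```javascript
// Hypothetical helper: SIGKILL a Puppeteer-launched browser and all of its child processes.
// Assumes `tree-kill` is installed (npm i tree-kill); it is only required when no killFn is passed.
function forceKillBrowser(browser, killFn) {
  const kill = killFn ?? require('tree-kill');
  // browser.process() is the ChildProcess Puppeteer spawned; null if we only connected.
  const child = browser.process();
  if (!child || child.pid === undefined) {
    return Promise.resolve(false); // nothing we own to kill
  }
  return new Promise((resolve, reject) => {
    // tree-kill signals every process in the tree rooted at pid (renderers, helpers, ...).
    kill(child.pid, 'SIGKILL', (err) => (err ? reject(err) : resolve(true)));
  });
}
```

Usage: `await forceKillBrowser(browser);` once the browser is no longer needed. SIGKILL gives Chrome no chance to clean up, which is the point here but also why it should be a last resort.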

Use with caution, though: only kill the process once you no longer need the browser at all 😅

like-gold•2y ago

Another option is to use a very obsolete library, https://github.com/thomasdondorf/puppeteer-cluster. You can control the concurrency, and it efficiently manages all the browsers/pages running on the server. See this example of running an Express server with browsers on it: https://github.com/thomasdondorf/puppeteer-cluster/blob/master/examples/express-screenshot.js 👍

PS: this library is not maintained, but for the most part it gets the job done. 😀

ambitious-aquaOP•2y ago

zzz

💤

@microworlds Thanks for checking. How do you get the browser.pid from the BrowserPool within Crawlee?

This solution goes against the BrowserPool 🤣

ambitious-aquaOP•2y ago

lmao -- I agree. I'm thinking Crawlee should help manage this

but gotta do what you gotta do.

I'm 100% sure the issue lies in the routes. I crawled 10 million URLs with a single crawler without this issue 🤣

But I tweaked the routes to be as memory-efficient as possible.

like-gold•2y ago

Ah, I see. None of the examples I gave above use Crawlee. They're probably not suitable for your use case, but I've been using this approach in several actors (running on a VPS) in production.

Since you're using Crawlee, I'd recommend lowering the concurrency until you find the OPTIMAL performance settings. This lets Crawlee handle the browsers gracefully, regardless of how many instances are spawned.

The pages are probably super heavy, so Crawlee's autoscaling is not able to keep memory usage under the limit. Maybe you could slow down the scaling. If you want to dig in, it would be better to open a Crawlee GitHub issue with a reproduction, ideally including the log that shows how the current and desired concurrency change over time.
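For reference, both suggestions (lower concurrency, slower scaling) map to constructor options. A minimal sketch, assuming Crawlee 3.x `PuppeteerCrawler`; every number below is an illustrative placeholder to tune, not a recommended value.

```javascript
// Illustrative PuppeteerCrawler options for memory-heavy pages (Crawlee 3.x assumed).
// All numbers are placeholders to tune, not recommended values.
const crawlerOptions = {
  // Hard upper bound on parallel requests, and therefore on open pages.
  maxConcurrency: 2,
  // Fail a request whose handler runs longer than this instead of letting it hang.
  requestHandlerTimeoutSecs: 60,
  autoscaledPoolOptions: {
    desiredConcurrency: 1, // start low
    scaleUpStepRatio: 0.02, // grow concurrency more slowly than the default
  },
  browserPoolOptions: {
    // Recycle browsers regularly so a leaky one does not live forever.
    retireBrowserAfterPageCount: 50,
    maxOpenPagesPerBrowser: 2,
  },
};
```

These would be spread into the constructor, e.g. `new PuppeteerCrawler({ ...crawlerOptions, requestHandler: router })`. The memory limit the autoscaler works against can be set with the `CRAWLEE_MEMORY_MBYTES` environment variable.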