Using browser_new_context_options with PlaywrightBrowserPlugin

Hello,

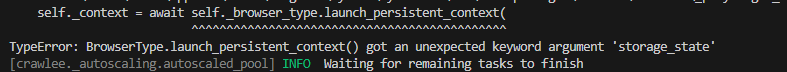

I'm very confused about how to use browser_new_context_options, because the error I get implies that storage_state is not an argument that Playwright's new_context call supports. However, in the Playwright docs, storage_state seems to support loading cookies as a dictionary, and when I run Playwright by itself and load cookies through this method, everything works fine.

A lot of the cookie-related questions in this forum seem to be from before the most recent build, so I was wondering what the syntax should be to properly load cookies through the PlaywrightBrowserPlugin class, as everything else seems to work just fine once that's sorted.

TLDR: what's the syntax for using browser_new_context_options so that it can load a cookie that's set up as a dictionary?

Solution:

figured out the issue in dms, for anyone looking up this sort of issue in the future, you'd want to have something akin to this script

some lines of interest are:

```
"expires": int(parts[4]) if parts[4] else None,  # type: ignore
"http_only": parts[3].lower() == 'true',...
```

12 Replies

Hello @Glitchy_mess, could you please provide a more complete code example? This will allow us to test it on our side.

Hey @Glitchy_mess

Yes, it can indeed be a bit confusing.

PlaywrightCrawler in basic mode uses launch_persistent_context, which has no storage_state parameter.

The standard BrowserContext is only used when the use_incognito_page flag is set.

oh for sure, here's a rough script (although most of it is just lifted from the "setting up" guide that crawlee has for the playwright crawler)

i also messed around with using SessionCookies and the PlaywrightPreNavCrawlingContext, but those didn't really lead anywhere

oh ok that's interesting, adding the flag does make the request go through without any errors, but for some reason the cookies itself don't seem to register since i'm still set on the sign in screen for the website i'm crawling

(if it matters, it's this page https://sellercentral.amazon.ca/help/hub/reference/G200164490, afaik they don't have any anti-scraping things in place since playwright is able to load the cookies just fine)
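For readers following along, here is a minimal sketch of the dictionary shape that Playwright documents for storage_state (a "cookies" list plus an "origins" list). The helper name `build_storage_state` and every cookie value are hypothetical placeholders. How the resulting dict is handed to PlaywrightBrowserPlugin (as browser_new_context_options, together with the incognito flag mentioned above) is an assumption to check against your installed Crawlee version.

```python
import time


def build_storage_state(cookies):
    """Wrap cookie dicts in the {"cookies": [...], "origins": [...]} shape
    that Playwright documents for storage_state."""
    return {"cookies": list(cookies), "origins": []}


# Hypothetical cookie: every value here is a placeholder.
example_cookie = {
    "name": "example-cookie",
    "value": "example-value",
    "domain": ".example.com",
    "path": "/",
    "expires": int(time.time()) + 3600,  # one hour from now, in Unix seconds
    "httpOnly": True,   # storage_state uses Playwright's camelCase keys
    "secure": True,
    "sameSite": "Lax",
}

# Assumption: this dict is what gets passed as browser_new_context_options.
browser_new_context_options = {
    "storage_state": build_storage_state([example_cookie]),
}
```

Note that this is the raw Playwright format; the solution at the end of the thread uses snake_case keys such as http_only instead.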

"PlaywrightPreNavCrawlingContext, but those didn't really lead anywhere"

If you used dummyCookie, the cookies weren't set because they expire instantly.

Playwright uses -1 for cookies that should not expire.

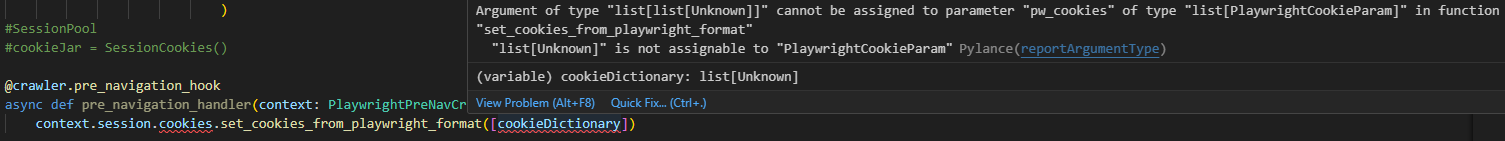

Correctly set cookies before navigation

"If you used dummyCookie, they didn't install because instantly expire"

oh didn't know that, i just used 1 since it was supposed to be a dummy var, but in practice the cookies i'm trying to pass have a duration of ~1,700,000,000

as for using the pre navigation hook, when running, this error gets thrown:

i think the ValueError is referring to "signin-sso-state-ca" as the element #0, but that's 19 characters long, and i don't know where you'd find the 2 length element that playwright is looking for there

also i added two errors that pylance is throwing. I'm not sure what either means in this context, but i don't think pylance really knows how to handle crawlee so it might be nothing?

"duration of ~1,700,000,000"

This should be the timestamp of some point in the future, example - 1751042637

"ValueError: dictionary update sequence element #0 has length 6; 2 is required"

This is very strange

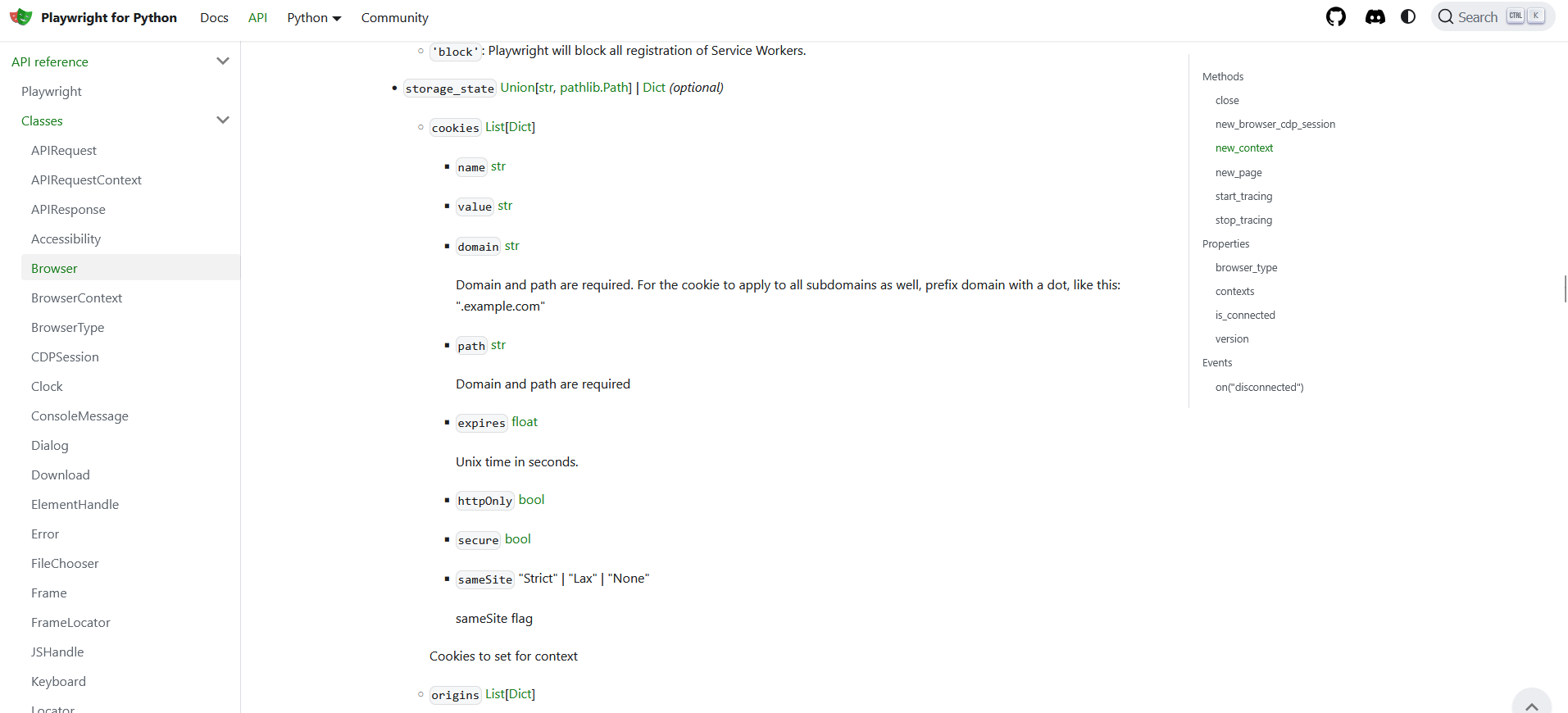

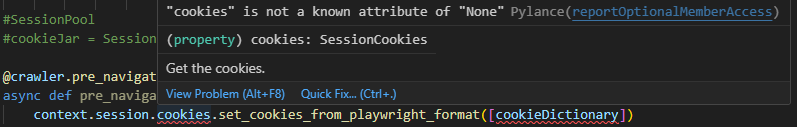
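In plain Python, this message comes from dict() or dict.update() receiving a sequence whose items are not key/value pairs. The thread does not show where that happened, so the following is only a minimal reproduction, assuming a six-field cookie record (field names hypothetical) reached such a call:

```python
# dict() expects an iterable of (key, value) pairs. The single element
# below has six items instead of two, which triggers the same message.
six_field_row = ("name", "value", "domain", "path", "expires", "secure")  # hypothetical

try:
    dict([six_field_row])
    message = ""
except ValueError as exc:
    message = str(exc)

print(message)
```

The "element #0 has length 6" part counts items in the first element, not characters in a cookie name, which may be why the 19-character name did not match anything.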

"also i added two errors that pylance is throwing. I'm not sure what either mean in this context , but i don't think pylance really knows how to handle crawlee so it might be nothing?"

session is red because session can be None, and Pylance expects a check for that.

The other error is due to the fact that the set_cookies_from_playwright_format method is used mostly inside the library and is typed via TypedDict, but this typing is not available through public imports.

"This should be the timestamp of some point in the future, example - 1751042637"

Just make sure you don't try to set a cookie that expired, for example, an hour ago 😅
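A small sketch of the expiry rule discussed above: -1 for cookies that should not expire, otherwise a Unix timestamp that is still in the future. The helper name `is_usable_expiry` and the fixed reference time are assumptions made for illustration.

```python
import time

SESSION_COOKIE = -1  # the value the thread says Playwright uses for "no expiry"


def is_usable_expiry(expires, now=None):
    """Return True if `expires` is -1 or a Unix timestamp still in the future."""
    current = time.time() if now is None else now
    return expires == SESSION_COOKIE or expires > current


now = 1_700_000_000  # fixed reference time so the example is reproducible
print(is_usable_expiry(1, now))           # False: a dummy value of 1 is long expired
print(is_usable_expiry(-1, now))          # True
print(is_usable_expiry(now + 3600, now))  # True: one hour ahead
print(is_usable_expiry(now - 3600, now))  # False: expired an hour ago
```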

"session is red, because session can be None and pylance wait"

yeah adding the conditional fixed that, sweet, and i take it we can just ignore the error then since the typing isn't available from public imports
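The conditional mentioned here is ordinary Optional narrowing. A minimal sketch, using hypothetical stand-in classes (`StubSession`, `StubCookies`) rather than Crawlee's real types, with only the `cookies.set_cookies` name taken from the thread:

```python
from typing import Optional


class StubCookies:
    """Hypothetical stand-in for a session's cookie store."""

    def __init__(self) -> None:
        self.stored: list[dict] = []

    def set_cookies(self, cookies: list[dict]) -> None:
        self.stored.extend(cookies)


class StubSession:
    """Hypothetical stand-in for a crawler session."""

    def __init__(self) -> None:
        self.cookies = StubCookies()


def apply_cookies(session: Optional[StubSession], cookies: list[dict]) -> bool:
    # Without this check a type checker flags `session.cookies`,
    # because `session` may be None at this point.
    if session is None:
        return False
    session.cookies.set_cookies(cookies)
    return True
```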

"don't try to set a cookie that expired an hour ago"

oh true true, give me a sec to see if clearing the cookies and cache and re-signing in (which should hopefully refresh cookies?) helps

ok yeah so it's not the cookies expiring since i'm getting the same ValueError

"error then since the typing isn't available from public imports"

Typing for session.cookies.set_cookies is available, but its format is slightly different from Playwright's.

Will clear a typing error

"ok yeah so its not the cookies expiring since i'm getting the same ValueError"

It's hard to diagnose where the error occurred. If you'd like, you can message me in DMs so we can look at the actual data causing the error.

oh yeah i'm down for that

sent a friend request for that

Solution

figured out the issue in dms, for anyone looking up this sort of issue in the future, you'd want to have something akin to this script

some lines of interest are:

where you want to clean up the expires and http_only fields so that they don't conflict with playwright's formatting, and "secure" ensures that any cookies that start with a are formatted so that they are https only, as opposed to just looking for http, since playwright doesn't like the

beyond that, lines 38-44 are useful for logging whether a cookie has failed to load or not

also also major props to Mantisus for writing the script itself, i'm just dropping it here before closing the thread
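The script itself is not reproduced in the thread. As a rough illustration of the cleanup and logging described above, here is a sketch that parses delimited cookie lines into dicts. Only the two expressions for "expires" (position 4) and "http_only" (position 3) come from the thread. The tab-separated six-field layout, the function names, and the sample data are assumptions, and the "secure" handling is left out because the thread does not spell it out.

```python
import logging

logger = logging.getLogger("cookie_loader")


def parse_cookie_line(line, sep="\t"):
    """Turn one delimited line into a cookie dict.

    Assumed (hypothetical) field order:
    name, value, domain, http_only, expires, path.
    """
    parts = line.rstrip("\n").split(sep)
    if len(parts) != 6:
        raise ValueError(f"expected 6 fields, got {len(parts)}")
    return {
        "name": parts[0],
        "value": parts[1],
        "domain": parts[2],
        # The next two lines mirror the "lines of interest" from the thread.
        "http_only": parts[3].lower() == "true",
        "expires": int(parts[4]) if parts[4] else None,
        "path": parts[5] or "/",
    }


def load_cookies(lines):
    """Parse every line, logging which cookies loaded and which failed."""
    loaded, failed = [], []
    for number, line in enumerate(lines, start=1):
        try:
            cookie = parse_cookie_line(line)
        except ValueError as exc:
            logger.warning("cookie on line %d failed to load: %s", number, exc)
            failed.append(number)
        else:
            logger.info("loaded cookie %s", cookie["name"])
            loaded.append(cookie)
    return loaded, failed


# Hypothetical sample input: two valid lines and one malformed line.
sample = [
    "session-id\tabc123\t.example.com\tTRUE\t1751042637\t/",
    "prefs\tdark\t.example.com\tfalse\t\t/",
    "broken line",
]
loaded, failed = load_cookies(sample)
```

Collecting the failed line numbers alongside the log messages makes it easier to see which input rows need fixing before the cookies are handed to the crawler.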